Introduction to the Qualcomm Neural Processing SDK for AI and its components

Machine learning on edge devices

Rather than running inference workloads in the cloud, apps increasingly run them on edge devices such as smartphones and drones.

The Qualcomm® Neural Processing SDK for AI is deep learning software for the Snapdragon® mobile platform. It allows developers to use heterogeneous computing capabilities on Snapdragon that are designed for running trained neural networks on edge devices — without the need for a connection to the cloud.

The SDK helps accomplish the following three tasks, essential to working with machine learning (ML) and artificial intelligence (AI) on mobile:

- Converting trained models from TensorFlow and Open Neural Network Exchange (ONNX) formats to the Snapdragon-supported .dlc (Deep Learning Container) format

- Moving the workload of ML inference from the cloud to edge devices running the Snapdragon platform

- Running workloads on the appropriate Snapdragon compute cores (CPU, Qualcomm® Adreno™ GPU and Qualcomm® Hexagon™ DSP) inside those edge devices
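The third task, choosing a compute core, can be pictured as a simple fallback search. The following is a minimal Python sketch, not the SDK's API: the labels `DSP`, `GPU` and `CPU`, the preference order, and the function `pick_runtime` are all assumptions made for illustration.

```python
from typing import Iterable, Sequence

# Hypothetical runtime labels and preference order for this sketch;
# they are not identifiers taken from the SDK.
PREFERRED_ORDER = ("DSP", "GPU", "CPU")


def pick_runtime(available: Iterable[str],
                 preferred: Sequence[str] = PREFERRED_ORDER) -> str:
    """Return the first runtime in `preferred` that is also in `available`."""
    available_set = set(available)
    for runtime in preferred:
        if runtime in available_set:
            return runtime
    raise RuntimeError("no supported runtime is available on this device")


if __name__ == "__main__":
    # A device that exposes only the CPU and GPU falls back to the GPU.
    print(pick_runtime(["CPU", "GPU"]))
```

A real application would query the device for the runtimes it actually supports instead of passing a hard-coded list.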

Here are the basics of the SDK.

What’s in the Qualcomm Neural Processing SDK for AI?

- Android and Linux runtimes for neural network model execution

- Acceleration support for Hexagon DSP, Adreno GPU and Qualcomm® Kryo™ CPU

- Support for models in ONNX and TensorFlow formats

- APIs for controlling loading, executing and scheduling on the runtimes

- Java library for Android integration

- C++ and Python libraries to help with implementation and usage of various machine learning models

- Access to SDK tools for debugging and benchmarking. The SDK includes the following types of tools:

  - Tools to load the operational data for models

  - Desktop tools that convert models to the .dlc format

  - Benchmarking and throughput calculation tools
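To make "throughput calculation" concrete, here is a minimal Python sketch of the arithmetic such a tool might perform. It does not use the SDK's benchmarking tools; `measure_latencies`, `throughput` and the `run_once` callback are hypothetical names, and `run_once` stands in for a single inference call.

```python
import time
from typing import Callable, List, Sequence


def measure_latencies(run_once: Callable[[], None], iterations: int) -> List[float]:
    """Call `run_once` `iterations` times and return each call's latency in seconds."""
    latencies = []
    for _ in range(iterations):
        start = time.perf_counter()
        run_once()
        latencies.append(time.perf_counter() - start)
    return latencies


def throughput(latencies: Sequence[float]) -> float:
    """Inferences per second: number of runs divided by total elapsed time."""
    total = sum(latencies)
    if total <= 0:
        raise ValueError("total latency must be positive")
    return len(latencies) / total


if __name__ == "__main__":
    # Stand-in workload; a real benchmark would execute the model here.
    samples = measure_latencies(lambda: sum(range(10_000)), iterations=20)
    print(f"{throughput(samples):.1f} inferences per second")
```

Measured this way, throughput is the reciprocal of the mean latency, so it only describes sequential, one-at-a-time execution.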

What is a Deep Learning Container (DLC)?

Each machine learning framework stores models in its own format. The Qualcomm Neural Processing SDK converts them into a common format called Deep Learning Container (DLC). The conversion output also includes information about which layers of the network are supported and which are not, so that developers can design the initial model accordingly.
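The supported/unsupported layer report described above can be sketched in a few lines of Python. This is an illustration only: the set `SUPPORTED_LAYER_TYPES`, the layer names in it, and the function `support_report` are assumptions, and the real list of supported layers depends on the SDK version and target runtime.

```python
from typing import Dict, FrozenSet, Iterable, List

# Hypothetical set of layer types the target format can express.
SUPPORTED_LAYER_TYPES: FrozenSet[str] = frozenset(
    {"Conv2D", "Relu", "MaxPool", "Softmax"}
)


def support_report(layer_types: Iterable[str],
                   supported: FrozenSet[str] = SUPPORTED_LAYER_TYPES
                   ) -> Dict[str, List[str]]:
    """Split a model's layer types into supported and unsupported, keeping order."""
    report: Dict[str, List[str]] = {"supported": [], "unsupported": []}
    for layer_type in layer_types:
        key = "supported" if layer_type in supported else "unsupported"
        report[key].append(layer_type)
    return report


if __name__ == "__main__":
    model_layers = ["Conv2D", "Relu", "MyCustomOp", "Softmax"]
    print(support_report(model_layers))
```

A non-empty `unsupported` list is the signal to either redesign that part of the model or provide the missing layer through a user-defined layer.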

Workflow

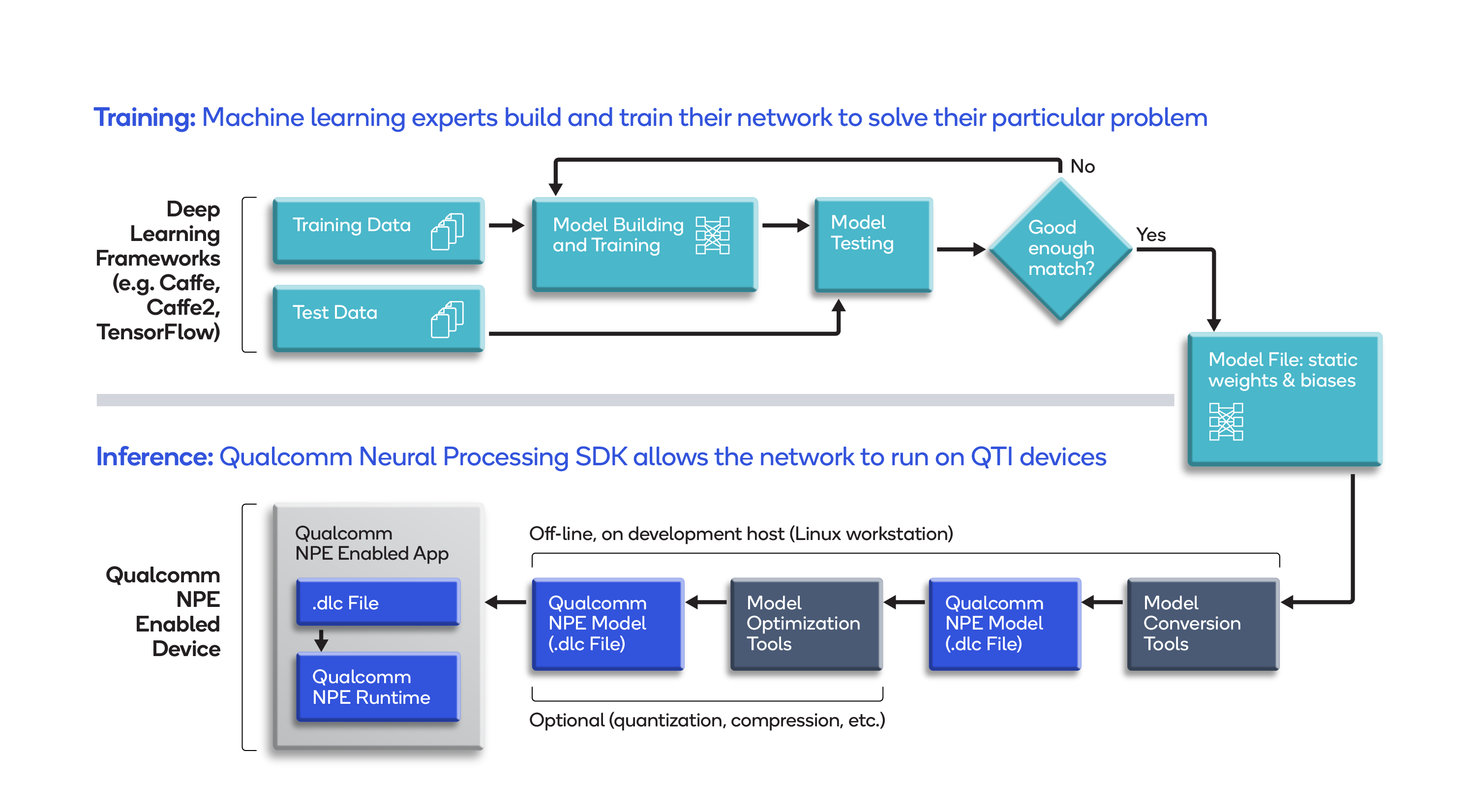

The Qualcomm Neural Processing SDK is designed to help developers build applications that use ONNX or TensorFlow models on Snapdragon platforms by converting the trained models into DLC-formatted files for inference, as shown in the image below.

Software operation flow

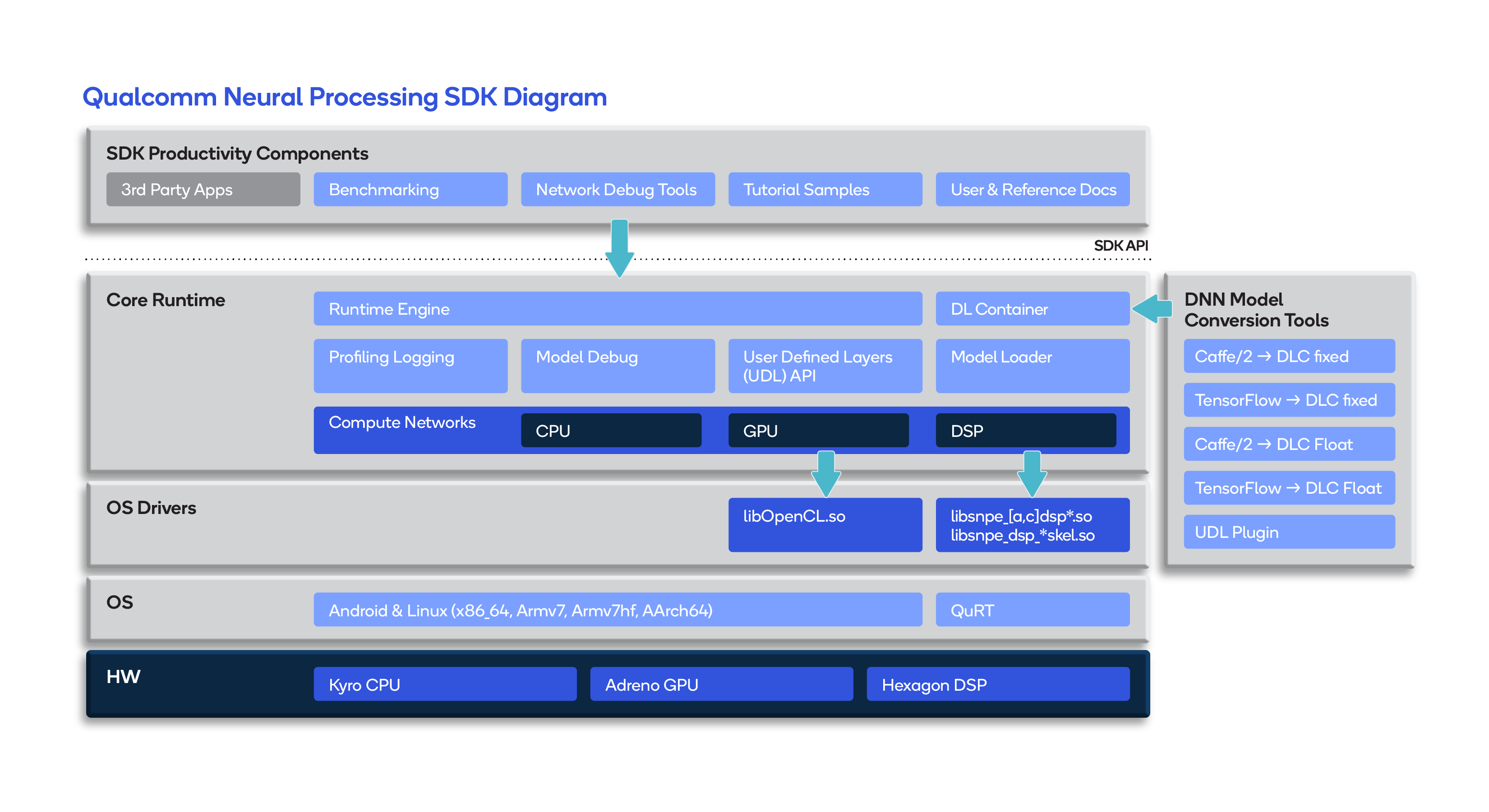

The figure below represents the software operation flow of the SDK.

With its user-defined layer (UDL) API, the SDK lets developers add custom layers to suit the problem their application addresses. The following figure shows an overview of the modifications needed to support user-defined layers that are unknown to the Qualcomm® Neural Processing Engine (NPE) runtime and converters.
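The general idea of a user-defined layer, handing layers the runtime does not recognize to code supplied by the application, can be sketched as follows. This Python sketch is not the SDK's UDL API; `BUILTIN_LAYERS`, `register_user_layer`, `run_layer` and the layer name `Scale2x` are hypothetical and exist only to show the dispatch pattern.

```python
from typing import Callable, Dict, List

Tensor = List[float]
LayerFn = Callable[[Tensor], Tensor]

# Layers this hypothetical runtime already knows how to execute.
BUILTIN_LAYERS: Dict[str, LayerFn] = {
    "Relu": lambda x: [max(0.0, v) for v in x],
}

# Layers supplied by the application for types the runtime does not know.
user_layers: Dict[str, LayerFn] = {}


def register_user_layer(layer_type: str, fn: LayerFn) -> None:
    """Register an application-supplied implementation for `layer_type`."""
    user_layers[layer_type] = fn


def run_layer(layer_type: str, x: Tensor) -> Tensor:
    """Run a built-in layer if one exists, otherwise fall back to a user layer."""
    if layer_type in BUILTIN_LAYERS:
        return BUILTIN_LAYERS[layer_type](x)
    if layer_type in user_layers:
        return user_layers[layer_type](x)
    raise KeyError(f"no implementation for layer type {layer_type!r}")


# Example registration: a custom layer that doubles every element.
register_user_layer("Scale2x", lambda x: [2.0 * v for v in x])

if __name__ == "__main__":
    print(run_layer("Relu", [-1.0, 2.0]))
    print(run_layer("Scale2x", [1.0, -3.0]))
```

In this sketch only the runtime side is shown; the passage above notes that the converters also need to be made aware of such layers.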

Qualcomm Neural Processing SDK, Snapdragon, Qualcomm Neural Processing Engine, Qualcomm Adreno, Qualcomm Kryo and Qualcomm Hexagon are products of Qualcomm Technologies, Inc. and/or its subsidiaries.