Artificial Intelligence

AI is changing everything. Combined with powerful, energy-efficient processors and ubiquitous connectivity to the wireless edge, intelligence is moving to more devices, changing industries, and enabling new experiences. The Qualcomm AI Stack is an end-to-end offering that brings Qualcomm AI software capabilities together in one unified software stack to support multiple product lines.

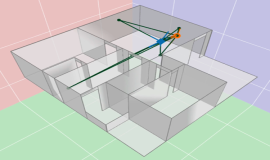

On-device AI allows for real-time responsiveness, improved privacy, enhanced reliability, and better overall performance, with or without a network connection. Our Qualcomm Artificial Intelligence (AI) Engine, along with our AI software and hardware tools outlined below (including our Qualcomm® Neural Processing SDK for AI), is designed to accelerate your on-device AI-enabled applications and experiences.

Snapdragon and Qualcomm branded products are products of Qualcomm Technologies, Inc. and/or its subsidiaries.

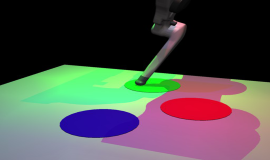

Qualcomm AI Research works to advance AI and make its core capabilities – perception, reasoning, and action – ubiquitous across devices. The goal is to make breakthroughs in fundamental AI research and scale them across industries. One way we contribute innovative and impactful AI research to the rest of the community is through novel papers at academic conferences.

Beyond papers, the Qualcomm Innovation Center (QuIC) actively contributes code based on this breakthrough research to open source projects.

The AI Model Efficiency Toolkit (AIMET) is a library that provides advanced quantization and compression techniques for trained neural network models. QuIC open sourced AIMET on GitHub to collaborate with other leading AI researchers, provide a simple library plugin for AI developers, and help migrate the ecosystem toward integer inference. Read the blog post or watch some informational AIMET videos to learn more.

The AIMET Model Zoo, another GitHub project, provides recipes for quantizing popular 32-bit floating point (FP32) models to 8-bit integer (INT8) models with little loss in accuracy. Read the blog post to learn more. To help developers get started, the Qualcomm Innovation Center YouTube channel offers informational videos on our open source projects.
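To make the FP32-to-INT8 idea concrete, here is a minimal sketch of affine (scale and zero-point) quantization in plain Python. It is an illustration only, not AIMET's API or the Model Zoo's recipe: the function names `quantize_int8` and `dequantize_int8` and the sample weights are hypothetical, and real toolkits apply more advanced techniques to whole models.

```python
def quantize_int8(values):
    """Map floats to INT8 codes using an affine scale/zero-point scheme.

    Hypothetical helper for illustration; not part of AIMET.
    """
    # Extend the range to include 0.0 so that zero is exactly representable.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    # One quantization step; fall back to 1.0 if every value is zero.
    scale = (hi - lo) / 255.0
    if scale == 0.0:
        scale = 1.0
    # Integer code that represents the real value 0.0.
    zero_point = round(-128 - lo / scale)
    # Round to the nearest step, shift, and clamp to the INT8 range.
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize_int8(codes, scale, zero_point):
    """Recover approximate float values from INT8 codes."""
    return [(c - zero_point) * scale for c in codes]


# Hypothetical FP32 weights, chosen only to demonstrate the round trip.
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize_int8(codes, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, restored, max_error)
```

In this example the round-trip error for each weight stays within one quantization step (`scale`), which gives a rough sense of why well-calibrated INT8 models can lose little accuracy relative to their FP32 originals.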

Data is another crucial element for machine learning. The Qualcomm Abstract Syntax Tree (QAST) dataset, which supported the experiments in our ICLR 2019 workshop paper, "Simulating Execution Time of Tensor Programs Using Graph Neural Networks," is available from our QAST Project Page. We hope this dataset will benefit the graph research community and raise interest in optimizing compiler research.