Streaming Live Frames to a Machine Learning Model with the Qualcomm Neural Processing SDK for AI

Steps for using feeds from front and rear cameras for prediction

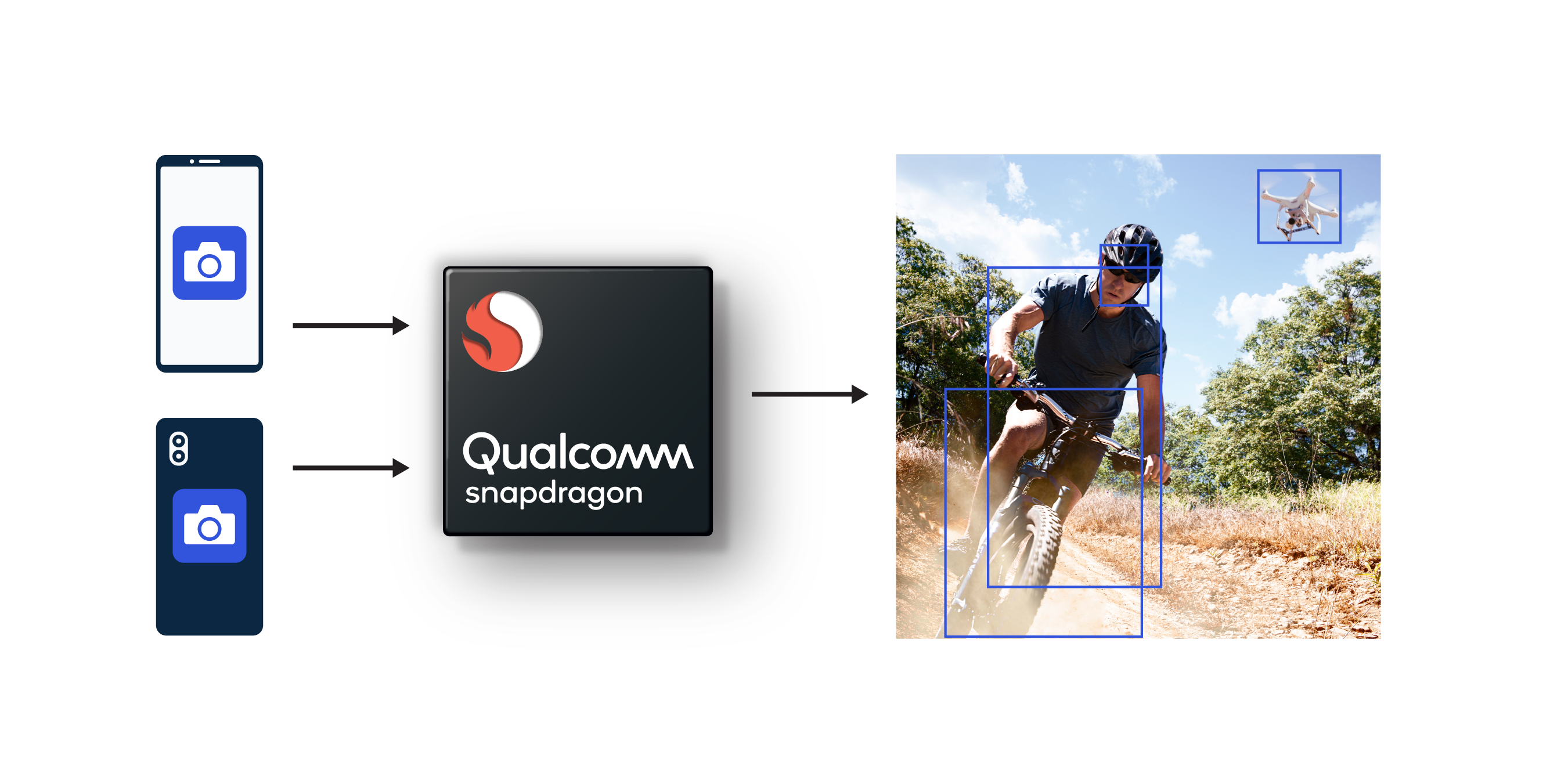

Following the steps below, you can set up a multi-camera stream with model prediction on a device running a Snapdragon® mobile platform.

Using live frames in the model

The procedure streams live frames from the front and rear cameras simultaneously to the machine learning model, using the Camera2 APIs from the Android SDK. It then converts the live frames to bitmaps and hands them off to the model, running on a Snapdragon processor, for prediction.

Finally, the model classifies the bitmap images and returns predictions in the form of labels, such as “drone” and “man on bike,” as shown in the image.

As a prerequisite, you should have experience developing Android applications.

1. Setting up the SDK

If you have not yet set up our Neural Processing SDK, download it from the Tools & Resources page on Qualcomm Developer Network. On our Getting Started page, you can also find system requirements and instructions for setting up the SDK, converting models and building the sample Android application in the Qualcomm® Neural Processing SDK for AI. (Note that Android NDK version r-11 is required for proper SDK setup.)

2. Setting runtime permissions

The camera requires a runtime permission to capture live frames. This is a requirement on Android 6.0 (API level 23) and later.

In the Android project, declare the following in the AndroidManifest.xml file:

<uses-permission android:name="android.permission.CAMERA"/>

The same camera permission must also be checked and requested at runtime in the code:

if (ContextCompat.checkSelfPermission(AppContext, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED) {
    // Camera permission has not been granted yet: request it here
}

3. Loading the model

The following code connects to the neural network and loads the model:

final SNPE.NeuralNetworkBuilder builder = new SNPE.NeuralNetworkBuilder(mApplicationContext)
    // Allows selecting a runtime order for the network.
    // In the example below use DSP and fall back, in order, to GPU then CPU
    // depending on whether any of the runtimes are available.
    .setRuntimeOrder(DSP, GPU, CPU)
    // Loads a model from DLC file
    .setModel(new File(""));

// Build the network
network = builder.build();

4. Capturing preview frames

The interface TextureView.SurfaceTextureListener can be used to render the camera preview and notify when the surface texture associated with this texture view is available. The overridden methods are as follows:

a. public abstract void onSurfaceTextureAvailable (SurfaceTexture surface, int width, int height)

This method is invoked when the surface texture of a TextureView is ready for use. The front and rear cameras are opened through CameraManager with camera IDs 1 and 0, respectively.

b. public void onSurfaceTextureSizeChanged (SurfaceTexture surfaceTexture, int i, int i1)

This method is invoked when the size of the buffers in the SurfaceTexture changes.

c. public void onSurfaceTextureUpdated (SurfaceTexture surfaceTexture)

This method is invoked when the specified SurfaceTexture is updated through SurfaceTexture.updateTexImage().

For Camera callbacks, CameraDevice.StateCallback is used for receiving updates about the state of a camera device. The overridden methods are as follows:

a. public void onClosed (CameraDevice camera)

This method is called when a camera device has been closed.

b. public void onOpened (CameraDevice cameraDevice)

This method is called when a camera device has finished opening. At this point, the camera device is ready to use and can be called to set up the first capture.

c. public void onDisconnected (CameraDevice cameraDevice)

This method is called when a camera device is no longer available for use.

d. public void onError (CameraDevice cameraDevice, int i)

This method is called when a camera device has encountered a serious error.

To track the progress of a camera capture, the CameraCaptureSession.CaptureCallback class is used. The callbacks are as follows:

a. public void onCaptureProgressed (CameraCaptureSession session, CaptureRequest request, CaptureResult partialResult)

This method is called when an image capture makes partial forward progress; some (but not all) results from an image capture are available.

b. public void onCaptureCompleted (CameraCaptureSession session, CaptureRequest request, TotalCaptureResult result)

This method is called when an image capture has fully completed and all the result metadata is available. The TotalCaptureResult parameter is produced by a CameraDevice after processing a CaptureRequest and contains the final values of the capture.

5. Getting the bitmap from TextureView

A bitmap of fixed height and width can be obtained from the TextureView in the onCaptureCompleted callback. This bitmap can then be compressed and used to classify the image.

6. Classifying the image

Before the bitmap is handed off for prediction, it must undergo basic image processing, such as conversion to RGB or grayscale. The processing required depends on the input shape the model expects. Examples:

- Inception network input image size: 299x299x3 (3 channel image input)

- MobileNet input image size: 224x224x3 (3 channel image input)

- VGG16/19 input image size: 224x224x3 (3 channel image input)

- fer2013 network input: 48x48x1 (1 channel image input)
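For a single-channel model such as the fer2013 network listed above, each pixel has to be reduced to one value. The following is a minimal sketch in plain Java, not code from the SDK. It assumes the bitmap's pixels have already been copied into an int array of packed ARGB values (for example with Bitmap.getPixels), and it assumes the common BT.601 luma weights and a [0, 1] value range. The class and method names are hypothetical, and the weights and scaling your model was trained with may differ.

```java
/** Hypothetical helper: reduces packed ARGB pixels to one grayscale float per pixel. */
final class GrayscaleInput {

    /**
     * Converts packed ARGB pixels (0xAARRGGBB) to luminance values in [0, 1].
     * The BT.601 weights and the [0, 1] scale are assumptions; match them to your model.
     */
    static float[] toGrayscale(int[] argbPixels) {
        float[] gray = new float[argbPixels.length];
        for (int i = 0; i < argbPixels.length; i++) {
            int p = argbPixels[i];
            int r = (p >> 16) & 0xFF; // red channel
            int g = (p >> 8) & 0xFF;  // green channel
            int b = p & 0xFF;         // blue channel
            gray[i] = (0.299f * r + 0.587f * g + 0.114f * b) / 255f;
        }
        return gray;
    }
}
```

For a 48x48 input, the returned array holds 48 * 48 = 2304 values, one per pixel in row-major order.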

Next, the processed image must be converted into a tensor. The prediction API requires a tensor of type float.
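As an illustration of the conversion for a three-channel model, here is a sketch in plain Java that turns packed ARGB pixels into the float values for a height x width x 3 input. The interleaved R, G, B ordering and the [0, 1] scaling are assumptions, as are the class and method names; some models expect mean subtraction or a different channel order instead. Writing the resulting array into the SDK's input tensor is not shown here.

```java
/** Hypothetical helper: packs ARGB pixels into interleaved RGB floats for a 3-channel input. */
final class RgbInput {

    /**
     * Returns width * height * 3 floats in row-major order, with the R, G and B
     * values of each pixel stored next to each other and scaled to [0, 1].
     * Layout and scaling are assumptions; match them to your model.
     */
    static float[] toRgbFloats(int[] argbPixels, int width, int height) {
        if (argbPixels.length != width * height) {
            throw new IllegalArgumentException("pixel count does not match width * height");
        }
        float[] values = new float[width * height * 3];
        for (int i = 0; i < argbPixels.length; i++) {
            int p = argbPixels[i];
            values[3 * i]     = ((p >> 16) & 0xFF) / 255f; // red
            values[3 * i + 1] = ((p >> 8) & 0xFF) / 255f;  // green
            values[3 * i + 2] = (p & 0xFF) / 255f;         // blue
        }
        return values;
    }
}
```

For a 224x224 MobileNet input, this yields 224 * 224 * 3 = 150528 float values.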

After all the processing is complete, the tensor goes to the neural network API for prediction, as shown in the following code:

// mNeuralNetwork is an instance of the NeuralNetwork class.
final Map<String, FloatTensor> outputs = mNeuralNetwork.execute(inputs);

where mNeuralNetwork is an instance of the NeuralNetwork class, and inputs is a map of input names to tensors.
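To turn the prediction into a label such as “drone,” the class with the highest score has to be looked up in the model's label list. The sketch below shows that lookup in plain Java. It assumes the output tensor has already been read into a float array of per-class scores and that the label list is in the same order as the model's output classes; the class name, method name, and the third label are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: maps per-class scores to the label with the highest score. */
final class TopLabel {

    /** Returns the label at the index of the largest score; on ties the first index wins. */
    static String best(float[] scores, List<String> labels) {
        if (scores.length == 0 || scores.length != labels.size()) {
            throw new IllegalArgumentException("scores and labels must be non-empty and the same size");
        }
        int bestIndex = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[bestIndex]) {
                bestIndex = i;
            }
        }
        return labels.get(bestIndex);
    }

    public static void main(String[] args) {
        List<String> labels = Arrays.asList("drone", "man on bike", "car");
        float[] scores = {0.1f, 0.7f, 0.2f};
        System.out.println(best(scores, labels)); // prints "man on bike"
    }
}
```

The same lookup would run once per camera, so the front and rear previews can each display their own label.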

Next step

Qualcomm Developer Network is a source for other projects that use the Qualcomm Neural Processing SDK for AI. Developers can use the projects as prototypes in their own development efforts.

Snapdragon and Qualcomm Neural Processing SDK are products of Qualcomm Technologies, Inc. and/or its subsidiaries.