Classification, Object Detection and Image Segmentation

Attaining deeper understanding of images for machine learning models

Some computer vision models can detect objects, determine their shape and predict the direction in which they will travel. For example, such models are at work in self-driving cars. Three important tasks undertaken by computer vision are classification, object detection and image segmentation.

Classification

Classification is a machine learning task for determining which objects are in an image or video. It refers to training machine learning models with the intent of finding out which classes (objects) are present. Classification is useful at the yes-no level of deciding whether an image contains an object/anomaly or not.

A separate task from classification is localization, or determining the position of the classified objects in the image or video.

Object detection

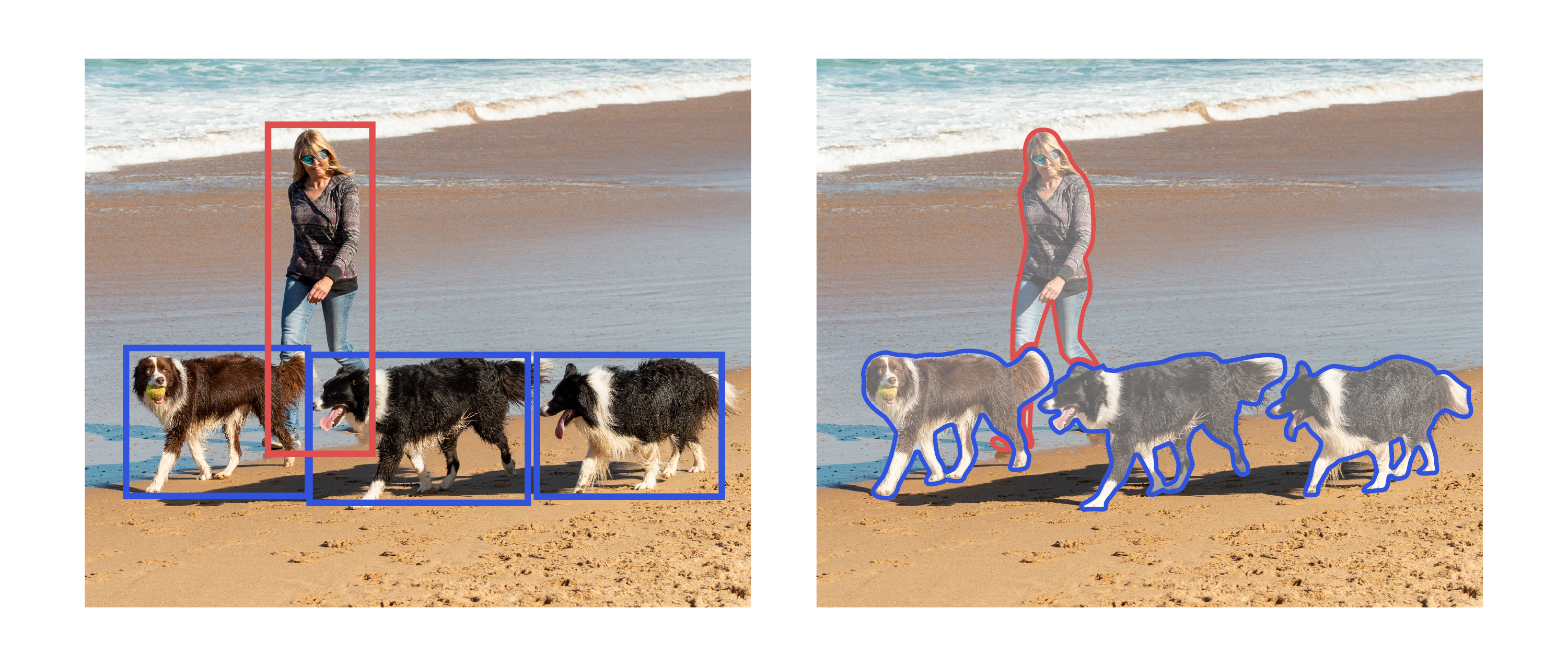

Object detection combines classification and localization to determine what objects are in the image or video and specify where they are in the image. It applies classification to distinct objects and uses bounding boxes, as shown below.

Object detection is useful in identifying objects in an image or video. Below, the image on the left illustrates classification, in which the classes Donut and Coffee are identified. The image on the right illustrates object detection by surrounding the members of each class — donut and coffee — with a bounding box.

Use cases for object detection include facial detection with any post-detection analysis; for example, expression detection, age estimation or drowsiness detection. Many real-time object detection applications exist for traffic management, such as vehicle detection systems based on traffic scenes.

As described above, the most popular approaches to computer vision are classification and object detection to identify objects present in an image and specify their position. But many use cases call for analyzing images at a lower level than that. That is where image segmentation comes in.

Image segmentation

Any image consists of both useful and useless information, depending on the user’s interest. Image segmentation separates an image into regions, each with its particular shape and border, delineating potentially meaningful areas for further processing, like classification and object detection. The regions may not take up the entire image, but the goal of image segmentation is to highlight foreground elements and make it easier to evaluate them. Image segmentation provides pixel-by-pixel details of an object, making it different from classification and object detection.

Below, the image on the left illustrates object detection, highlighting only the location of the objects. The image on the right illustrates image segmentation, showing pixel-by-pixel outlines of the objects.

Image segmentation algorithms

Image segmentation techniques use different algorithms.

| Algorithm | Description |

|---|---|

| Edge Detection Segmentation | Makes use of discontinuous local features of an image to detect edges and hence define a boundary of the object. |

| Mask R-CNN | Gives three outputs for each object in the image: its class, bounding box coordinates, and object mask |

| Segmentation based on Clustering | Divides the pixels of the image into homogeneous clusters. |

| Region-Based Segmentation | Separates the objects into different regions based on threshold value(s). |

To solve segmentation problems in a given domain, it is usually necessary to combine algorithms and techniques with specific knowledge of the domain.

(For a comprehensive look at image segmentation, read Image Segmentation Algorithms Overview by Song Yuheng and Yan Hao.)