Overview

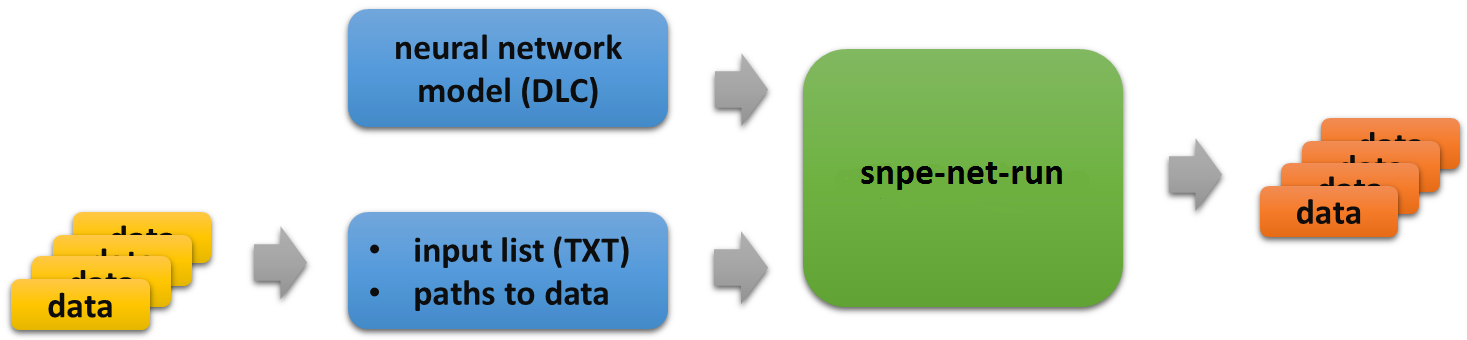

The example C++ application in this tutorial is called snpe-net-run. It is a command line executable that executes a neural network using SNPE SDK APIs.

The required arguments to snpe-net-run are:

- A neural network model in the DLC file format

- An input list file with paths to the input data.

Optional arguments to snpe-net-run are:

- Choice of GPU, DSP or AIP runtimes (default is CPU)

- Output directory (default is ./output)

- Show help description

snpe-net-run creates and populates an output directory with the results of executing the neural network on the input data.

The SNPE SDK provides Linux and Android binaries of snpe-net-run under:

- $SNPE_ROOT/bin/x86_64-linux-clang

- $SNPE_ROOT/bin/arm-android-clang6.0

- $SNPE_ROOT/bin/aarch64-android-clang6.0

- $SNPE_ROOT/bin/aarch64-oe-linux-gcc6.4

- $SNPE_ROOT/bin/arm-oe-linux-gcc6.4hf

Prerequisites

- The SNPE SDK has been set up following the SNPE Setup chapter.

- The Tutorials Setup has been completed.

- TensorFlow is installed (see TensorFlow Setup)

Introduction

The Inception v3 Imagenet classification model is trained to classify images with 1000 labels.

The examples below show the steps required to execute a pretrained, optimized, and optionally quantized Inception v3 model with snpe-net-run to classify a set of sample images. An optimized and quantized model is used in this example to showcase the DSP and AIP runtimes, which execute quantized 8-bit neural network models.

The DLC for the model used in this tutorial was generated and optimized using the TensorFlow optimizer tool, during the Getting Inception v3 portion of the Tutorials Setup, by the script $SNPE_ROOT/models/inception_v3/scripts/setup_inceptionv3.py. Additionally, if a fixed-point runtime such as DSP or AIP was selected when running the setup script, the model was quantized by snpe-dlc-quantize.

Learn more about a quantized model.

Run on Linux Host

Go to the base location for the model and run snpe-net-run:

cd $SNPE_ROOT/models/inception_v3
snpe-net-run --container dlc/inception_v3_quantized.dlc --input_list data/cropped/raw_list.txt

After snpe-net-run completes, the results are populated in the $SNPE_ROOT/models/inception_v3/output directory. There should be one or more .log files and several Result_X directories, each containing a softmax:0.raw file.
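The output layout described above can be walked with a few lines of Python. This is a minimal sketch, not part of the SDK: the helper name list_result_files is hypothetical, and the only facts it relies on are the Result_X directory naming and the softmax:0.raw file name given in this tutorial.

```python
import re
from pathlib import Path


def list_result_files(output_dir, output_name="softmax:0.raw"):
    """Return (index, path) pairs for every Result_<N> directory, in numeric order.

    Hypothetical helper: it only inspects directory names and does not
    check that the output file exists inside each directory.
    """
    pattern = re.compile(r"^Result_(\d+)$")
    found = []
    for entry in Path(output_dir).iterdir():
        match = pattern.match(entry.name)
        if entry.is_dir() and match:
            found.append((int(match.group(1)), entry / output_name))
    # Sort numerically so Result_10 comes after Result_9, not after Result_1.
    return sorted(found)


if __name__ == "__main__":
    # Assumes the current directory is $SNPE_ROOT/models/inception_v3.
    for index, path in list_result_files("output"):
        print(index, path)
```

Sorting on the parsed integer rather than on the directory name keeps the order stable once there are ten or more results.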

One of the inputs is data/cropped/handicap_sign.raw and it was created from data/cropped/handicap_sign.jpg which looks like the following:

With this input, snpe-net-run created the output file $SNPE_ROOT/models/inception_v3/output/Result_0/softmax:0.raw. It holds the output tensor data of 1000 probabilities for the 1000 categories. The element with the highest value represents the top classification. A python script to interpret the classification results is provided and can be used as follows:

python3 $SNPE_ROOT/models/inception_v3/scripts/show_inceptionv3_classifications.py -i data/cropped/raw_list.txt \
                                                                                   -o output/ \
                                                                                   -l data/imagenet_slim_labels.txt

The output should look like the following, showing classification results for all the images.

Classification results
<input_files_dir>/trash_bin.raw     0.850245 413 ashcan
<input_files_dir>/plastic_cup.raw   0.972899 648 measuring cup
<input_files_dir>/chairs.raw        0.286483 832 studio couch
<input_files_dir>/handicap_sign.raw 0.430206 920 street sign
<input_files_dir>/notice_sign.raw   0.138858 459 brass

Note: The <input_files_dir> above maps to a path such as $SNPE_ROOT/models/inception_v3/data/cropped/

The output shows that handicap_sign.raw was classified as "street sign" (index 920 of the labels) with a probability of 0.430206. The rest of the output can be examined to see the model's classification of the other images.
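To make the "highest value is the top classification" rule concrete, the following sketch reads one output file and ranks its entries. It is an illustration only and not the provided show_inceptionv3_classifications.py script. It assumes the .raw file is a headerless array of little-endian 32-bit floats and that the labels file holds one label per line; the helper names read_float32_raw and top_k are hypothetical.

```python
import struct


def read_float32_raw(path):
    """Read a headerless file of little-endian float32 values into a list.

    Assumption: the output tensor is stored as raw float32 with no header.
    """
    with open(path, "rb") as f:
        data = f.read()
    if len(data) % 4 != 0:
        raise ValueError("file size is not a multiple of 4 bytes")
    count = len(data) // 4
    return list(struct.unpack(f"<{count}f", data))


def top_k(probabilities, k=5):
    """Return the k (index, probability) pairs with the highest probability."""
    ranked = sorted(enumerate(probabilities), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Assumes the current directory is $SNPE_ROOT/models/inception_v3.
    probs = read_float32_raw("output/Result_0/softmax:0.raw")
    with open("data/imagenet_slim_labels.txt") as f:
        labels = [line.strip() for line in f]  # assumed: one label per line
    for index, prob in top_k(probs, k=1):
        print(f"{prob:.6f} {index} {labels[index]}")
```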

Binary data input

Note that the Inception v3 image classification model does not accept jpg files as input. The model expects its input tensor to be a 299x299x3 float array. The scripts/setup_inceptionv3.py script performs a jpg to binary data conversion by calling scripts/create_inceptionv3_raws.py. The scripts are an example of how jpg images can be preprocessed to generate input for the Inception v3 model.
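As a rough illustration of what "binary data" means here, the sketch below writes a 299x299x3 float array to a headerless file and checks its size. It is not the SDK's conversion script: it assumes 32-bit little-endian floats, takes values that are already decoded and scaled, and says nothing about the resizing, channel order, or normalization the real script applies. The helper name write_float32_raw is hypothetical.

```python
import os
import struct

HEIGHT, WIDTH, CHANNELS = 299, 299, 3
ELEMENT_COUNT = HEIGHT * WIDTH * CHANNELS
EXPECTED_BYTES = ELEMENT_COUNT * 4  # assumed: 4 bytes per float32 element


def write_float32_raw(values, path):
    """Write a flat sequence of floats as headerless little-endian float32."""
    values = list(values)
    if len(values) != ELEMENT_COUNT:
        raise ValueError(f"expected {ELEMENT_COUNT} values, got {len(values)}")
    with open(path, "wb") as f:
        f.write(struct.pack(f"<{len(values)}f", *values))


if __name__ == "__main__":
    # Placeholder input: an all-zero tensor instead of real image data.
    write_float32_raw([0.0] * ELEMENT_COUNT, "zeros.raw")
    print(os.path.getsize("zeros.raw") == EXPECTED_BYTES)
```

Under these assumptions, comparing the size of a generated .raw file against EXPECTED_BYTES is a quick sanity check before passing it to snpe-net-run.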

Run on Android Target

Select target architecture

SNPE provides Android binaries for armeabi-v7a and arm64-v8a architectures. For each architecture, there are binaries compiled with clang6.0 using libc++ STL implementation. The following shows the commands to select the desired binaries:

# architecture: armeabi-v7a - compiler: clang - STL: libc++
export SNPE_TARGET_ARCH=arm-android-clang6.0
export SNPE_TARGET_STL=libc++_shared.so

# architecture: arm64-v8a - compiler: clang - STL: libc++
export SNPE_TARGET_ARCH=aarch64-android-clang6.0
export SNPE_TARGET_STL=libc++_shared.so

For simplicity, this tutorial sets the target binaries to arm-android-clang6.0, which use libc++_shared.so, for commands on host and on target.

Push binaries to target

Push SNPE libraries and the prebuilt snpe-net-run executable to /data/local/tmp/snpeexample on the Android target.

export SNPE_TARGET_ARCH=arm-android-clang6.0
export SNPE_TARGET_STL=libc++_shared.so

adb shell "mkdir -p /data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin"
adb shell "mkdir -p /data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib"
adb shell "mkdir -p /data/local/tmp/snpeexample/dsp/lib"

adb push $SNPE_ROOT/lib/$SNPE_TARGET_ARCH/$SNPE_TARGET_STL \
      /data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
adb push $SNPE_ROOT/lib/$SNPE_TARGET_ARCH/*.so \
      /data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
adb push $SNPE_ROOT/lib/dsp/*.so \
      /data/local/tmp/snpeexample/dsp/lib
adb push $SNPE_ROOT/bin/$SNPE_TARGET_ARCH/snpe-net-run \
      /data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin

Set up environment variables

In adb shell, set the target architecture, the library path, and the PATH variable, then run the executable with the -h argument to see its help description.

adb shell
export SNPE_TARGET_ARCH=arm-android-clang6.0
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
export PATH=$PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin
snpe-net-run -h
exit

Push model data to Android target

To execute the Inception v3 classification model on an Android target, follow these steps:

cd $SNPE_ROOT/models/inception_v3
mkdir data/rawfiles && cp data/cropped/*.raw data/rawfiles/
adb shell "mkdir -p /data/local/tmp/inception_v3"
adb push data/rawfiles /data/local/tmp/inception_v3/cropped
adb push data/target_raw_list.txt /data/local/tmp/inception_v3
adb push dlc/inception_v3_quantized.dlc /data/local/tmp/inception_v3
rm -rf data/rawfiles

Note: It may take some time to push the Inception v3 dlc file to the target.
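The target uses its own input list because the raw files end up under cropped/ relative to /data/local/tmp/inception_v3 rather than at their host paths. The tutorial already supplies data/target_raw_list.txt, so the following is only a sketch of how the two lists relate. It assumes one input path per line, and the helper name to_target_list is hypothetical.

```python
import posixpath


def to_target_list(host_lines, target_dir="cropped"):
    """Rewrite host input paths so they are relative to the target working directory.

    Assumption: each non-empty line holds exactly one path to a .raw file.
    """
    target_lines = []
    for line in host_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        target_lines.append(posixpath.join(target_dir, posixpath.basename(line)))
    return target_lines


if __name__ == "__main__":
    # Assumes the current directory is $SNPE_ROOT/models/inception_v3.
    with open("data/cropped/raw_list.txt") as f:
        for path in to_target_list(f):
            print(path)
```

With this mapping, a host entry ending in data/cropped/trash_bin.raw becomes cropped/trash_bin.raw, the form that appears in the Android classification results.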

Running on Android using CPU Runtime

The Android C++ executable is run with the following commands:

adb shell
export SNPE_TARGET_ARCH=arm-android-clang6.0
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
export PATH=$PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin
cd /data/local/tmp/inception_v3
snpe-net-run --container inception_v3_quantized.dlc --input_list target_raw_list.txt
exit

The executable will create the results folder: /data/local/tmp/inception_v3/output. To pull the output:

adb pull /data/local/tmp/inception_v3/output output_android

Check the classification results by running the following python script:

python3 scripts/show_inceptionv3_classifications.py -i data/target_raw_list.txt \
                                                    -o output_android/ \
                                                    -l data/imagenet_slim_labels.txt

The output should look like the following, showing classification results for all the images.

Classification results
cropped/trash_bin.raw     0.850245 413 ashcan
cropped/plastic_cup.raw   0.972899 648 measuring cup
cropped/chairs.raw        0.286483 832 studio couch
cropped/handicap_sign.raw 0.430207 920 street sign
cropped/notice_sign.raw   0.138857 459 brass

Running on Android using DSP Runtime

Try running on an Android target with the --use_dsp option as follows:

Note the extra environment variable ADSP_LIBRARY_PATH must be set to use DSP. (See DSP Runtime Environment for details.)

adb shell
export SNPE_TARGET_ARCH=arm-android-clang6.0
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
export PATH=$PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin
export ADSP_LIBRARY_PATH="/data/local/tmp/snpeexample/dsp/lib;/system/lib/rfsa/adsp;/system/vendor/lib/rfsa/adsp;/dsp"
cd /data/local/tmp/inception_v3
snpe-net-run --container inception_v3_quantized.dlc --input_list target_raw_list.txt --use_dsp
exit
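The CPU and DSP command sequences differ only in the ADSP_LIBRARY_PATH export and the --use_dsp flag. The sketch below assembles both variants from the paths used in this tutorial so the difference is easy to see. It is a hypothetical helper for illustration, not an SDK tool, and it only builds the command strings without running them.

```python
TARGET_ROOT = "/data/local/tmp/snpeexample"
MODEL_DIR = "/data/local/tmp/inception_v3"

# Search directories for DSP libraries; the list is joined with semicolons.
ADSP_SEARCH_DIRS = [
    f"{TARGET_ROOT}/dsp/lib",
    "/system/lib/rfsa/adsp",
    "/system/vendor/lib/rfsa/adsp",
    "/dsp",
]

# Only the two runtimes shown so far in this tutorial.
RUNTIME_FLAGS = {"cpu": None, "dsp": "--use_dsp"}


def build_target_commands(runtime="cpu", arch="arm-android-clang6.0"):
    """Return the shell lines to type inside adb shell for the given runtime."""
    if runtime not in RUNTIME_FLAGS:
        raise ValueError(f"unsupported runtime: {runtime}")
    lines = [
        f"export SNPE_TARGET_ARCH={arch}",
        f"export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:{TARGET_ROOT}/$SNPE_TARGET_ARCH/lib",
        f"export PATH=$PATH:{TARGET_ROOT}/$SNPE_TARGET_ARCH/bin",
    ]
    flag = RUNTIME_FLAGS[runtime]
    if flag:
        # The DSP runtime additionally needs ADSP_LIBRARY_PATH.
        lines.append('export ADSP_LIBRARY_PATH="' + ";".join(ADSP_SEARCH_DIRS) + '"')
    lines.append(f"cd {MODEL_DIR}")
    run = "snpe-net-run --container inception_v3_quantized.dlc --input_list target_raw_list.txt"
    if flag:
        run += " " + flag
    lines.append(run)
    return lines


if __name__ == "__main__":
    print("\n".join(build_target_commands("dsp")))
```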

Pull the output into an output_android_dsp directory.

adb pull /data/local/tmp/inception_v3/output output_android_dsp

Check the classification results by running the following python script:

python3 scripts/show_inceptionv3_classifications.py -i data/target_raw_list.txt \
                                                    -o output_android_dsp/ \
                                                    -l data/imagenet_slim_labels.txt

The output should look like the following, showing classification results for all the images.

Classification results
cropped/trash_bin.raw     0.639935 413 ashcan
cropped/plastic_cup.raw   0.937354 648 measuring cup
cropped/chairs.raw        0.275142 832 studio couch
cropped/handicap_sign.raw 0.134832 920 street sign
cropped/notice_sign.raw   0.258279 459 brass

The top classification for each image is identical to the run with the CPU runtime, but the associated probabilities differ because the DSP runtime executes the model in quantized 8-bit arithmetic, which has lower numerical precision.
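The comparison between the CPU and DSP runs can be checked mechanically. The sketch below parses the two result listings shown in this tutorial and reports, per image, whether the label index matches and how far the probability moved. It assumes the listing columns are path, probability, label index, and label; the helper names parse_results and compare_runs are hypothetical.

```python
CPU_RESULTS = """\
cropped/trash_bin.raw 0.850245 413 ashcan
cropped/plastic_cup.raw 0.972899 648 measuring cup
cropped/chairs.raw 0.286483 832 studio couch
cropped/handicap_sign.raw 0.430207 920 street sign
cropped/notice_sign.raw 0.138857 459 brass
"""

DSP_RESULTS = """\
cropped/trash_bin.raw 0.639935 413 ashcan
cropped/plastic_cup.raw 0.937354 648 measuring cup
cropped/chairs.raw 0.275142 832 studio couch
cropped/handicap_sign.raw 0.134832 920 street sign
cropped/notice_sign.raw 0.258279 459 brass
"""


def parse_results(text):
    """Map each input path to (probability, label_index, label)."""
    results = {}
    for line in text.strip().splitlines():
        # The label may contain spaces, so split at most three times.
        path, prob, index, label = line.split(None, 3)
        results[path] = (float(prob), int(index), label)
    return results


def compare_runs(reference, other):
    """Return (path, same_label, probability_delta) for each reference entry."""
    rows = []
    for path, (ref_prob, ref_index, _) in reference.items():
        other_prob, other_index, _ = other[path]
        rows.append((path, ref_index == other_index, other_prob - ref_prob))
    return rows


if __name__ == "__main__":
    cpu = parse_results(CPU_RESULTS)
    dsp = parse_results(DSP_RESULTS)
    for path, same_label, delta in compare_runs(cpu, dsp):
        print(f"{path:28s} same_label={same_label} delta={delta:+.6f}")
```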

Running on Android using AIP Runtime

The AIP runtime allows you to run the Inception v3 model on the HTA.

Running the model using the AIP runtime requires setting the --runtime argument as 'aip' in the script $SNPE_ROOT/models/inception_v3/scripts/setup_inceptionv3.py to allow HTA-specific metadata to be packed into the DLC that is required by the AIP runtime.

Refer to Getting Inception v3 for more details.

Other than that the additional settings for AIP runtime are quite similar to those for the DSP runtime.

Try running on an Android target with the --use_aip option as follows:

Note that the extra environment variable ADSP_LIBRARY_PATH must also be set to use the AIP runtime, as for DSP. (See DSP Runtime Environment for details.)

adb shell
export SNPE_TARGET_ARCH=arm-android-clang6.0
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/lib
export PATH=$PATH:/data/local/tmp/snpeexample/$SNPE_TARGET_ARCH/bin
export ADSP_LIBRARY_PATH="/data/local/tmp/snpeexample/dsp/lib;/system/lib/rfsa/adsp;/system/vendor/lib/rfsa/adsp;/dsp"
cd /data/local/tmp/inception_v3
snpe-net-run --container inception_v3_quantized.dlc --input_list target_raw_list.txt --use_aip
exit

Pull the output into an output_android_aip directory.

adb pull /data/local/tmp/inception_v3/output output_android_aip

Check the classification results by running the following python script:

python3 scripts/show_inceptionv3_classifications.py -i data/target_raw_list.txt \
                                                    -o output_android_aip/ \
                                                    -l data/imagenet_slim_labels.txt

The output should look like the following, showing classification results for all the images.

Classification results
cropped/trash_bin.raw     0.683813 413 ashcan
cropped/plastic_cup.raw   0.971473 648 measuring cup
cropped/chairs.raw        0.429178 832 studio couch
cropped/handicap_sign.raw 0.338605 920 street sign
cropped/notice_sign.raw   0.154364 459 brass

The top classification for each image is identical to the run with the CPU runtime, but the associated probabilities differ because the AIP runtime executes the model in quantized 8-bit arithmetic, which has lower numerical precision.