The SNPE SDK supports a Tensorflow version and a Caffe version of the MobilenetSSD model. See Using MobilenetSSD for more details.

Benchmarking Mobilenet SSD requires a few additions to the benchmark JSON configuration file and also the data input list.

Read the benchmark overview first to familiarize yourself with the benchmarking tool.

This tutorial assumes the following example files and directories exist:

- /tmp/mobilenetssd.dlc - the converted neural network model file

- /tmp/mobilenetssd.json - benchmark configuration file

- /tmp/imagelist.txt - list of raw image paths (one per line)

- /tmp/images - directory containing images specified in imagelist.txt above

The Mobilenet SSD benchmark configuration file contains the same entries as defined in the benchmark overview, plus one additional parameter. Create or edit /tmp/mobilenetssd.json and add a "CpuFallback": true line to enable the CpuFallback option. This option is required for the Mobilenet SSD benchmark to execute properly.
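The edit described above can also be scripted. The following is a minimal sketch, assuming the configuration file is plain JSON with the entries used in this tutorial. The helper names `enable_cpu_fallback` and `update_config_file` are hypothetical and are not part of the SNPE SDK.

```python
import json
from pathlib import Path


def enable_cpu_fallback(config: dict) -> dict:
    """Return a copy of a benchmark config with "CpuFallback" enabled.

    Hypothetical helper for illustration; not part of the SNPE SDK.
    """
    updated = dict(config)         # shallow copy, the caller's dict is left untouched
    updated["CpuFallback"] = True  # json.dumps writes this as: "CpuFallback": true
    return updated


def update_config_file(path: str) -> None:
    """Read the JSON config at `path`, enable CpuFallback, and write it back."""
    config_path = Path(path)
    config = json.loads(config_path.read_text())
    config_path.write_text(json.dumps(enable_cpu_fallback(config), indent=4) + "\n")
```

Under these assumptions, calling `update_config_file("/tmp/mobilenetssd.json")` would rewrite the example configuration file in place with the option enabled, leaving all other entries unchanged.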

The JSON file should look similar to this:

    {
        "Name": "mobilenet_ssd",
        "HostRootPath": "mobilenet_ssd",
        "HostResultsDir": "mobilenet_ssd/results",
        "DevicePath": "/data/local/tmp/snpebm",
        "Devices": ["454d40f3"],
        "Runs": 2,
        "Model": {
            "Name": "mobilenet_ssd",
            "Dlc": "/tmp/mobilenetssd.dlc",
            "InputList": "/tmp/imagelist.txt",
            "Data": [
                "/tmp/images"
            ]
        },
        "Runtimes": ["GPU"],
        "Measurements": ["timing"],
        "CpuFallback": true,
        "BufferTypes": ["ub_float", "ub_tf8"],
        "ProfilingLevel": "detailed"
    }

Tensorflow Mobilenet SSD model

Tensorflow Mobilenet SSD has more than one output layer. To accurately represent the outputs in the benchmark run, the additional outputs need to be specified as part of /tmp/imagelist.txt.

The output layers for the model are:

- Postprocessor/BatchMultiClassNonMaxSuppression

- add_6

Add the output layer names on the first line of the image list file (/tmp/imagelist.txt) starting with '#' and separating each layer name with a space. For example,

    #Postprocessor/BatchMultiClassNonMaxSuppression add_6
    tmp/0#.rawtensor
    tmp/1#.rawtensor

NOTE: If the model is retrained and the output layers change, the first line in imagelist.txt must be updated to reflect these changes.
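Because the header line has to be kept in sync with the model, it can help to generate the image list rather than edit it by hand. The following is a minimal sketch of that idea; `build_input_list` is a hypothetical helper, not part of the SNPE SDK, and it assumes only the format described above: a first line starting with '#' that lists the output layer names separated by spaces, followed by one raw image path per line.

```python
from typing import Iterable


def build_input_list(output_layers: Iterable[str], raw_paths: Iterable[str]) -> str:
    """Build image-list text: a '#' header with the output layers, then one path per line.

    Hypothetical helper for illustration; not part of the SNPE SDK.
    """
    header = "#" + " ".join(output_layers)
    return "\n".join([header, *raw_paths]) + "\n"


# Layer names and paths are the ones used in this tutorial's example.
text = build_input_list(
    ["Postprocessor/BatchMultiClassNonMaxSuppression", "add_6"],
    ["tmp/0#.rawtensor", "tmp/1#.rawtensor"],
)
print(text, end="")
```

If the model is retrained, only the list of layer names passed to the helper needs to change. The returned text can then be written to /tmp/imagelist.txt.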

Caffe Mobilenet SSD model

Caffe Mobilenet SSD normally has one output layer (e.g. detection_out). If it has more than one output layer, the additional outputs need to be specified as part of /tmp/imagelist.txt so that the benchmark run represents the outputs accurately.

Add the output layer names on the first line of the image list file (/tmp/imagelist.txt) starting with '#' and separating each layer name with a space. For example,

    #a/detection_out b/detection_out
    tmp/0#.rawtensor
    tmp/1#.rawtensor

Running benchmark

Run the benchmark via

python3 snpe_bench.py -c /tmp/mobilenetssd.json -a

Viewing the Results (CSV File or JSON File)

All results are stored in the "HostResultsDir" that is specified in the JSON configuration file. The benchmark creates time-stamped directories for each benchmark run. All timing results are stored in microseconds.

For your convenience, a "latest_results" link is created that always points to the most recent run.

    # In mobilenetssd.json, "HostResultsDir" is set to "mobilenet_ssd/results"
    cd $SNPE_ROOT/benchmarks/mobilenet_ssd/results
    # Notice the time stamped directories and the "latest_results" link.
    cd $SNPE_ROOT/benchmarks/mobilenet_ssd/results/latest_results
    # Notice the .csv file, open this file in a csv viewer (Excel, LibreOffice Calc)
    # Notice the .json file, open the file with any text editor
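The same lookup can be done from a script. The following is a minimal sketch, assuming only what is described above: the results directory contains a "latest_results" link, and the latest run contains files ending in .csv and .json. The helper name `find_result_files` is hypothetical, and the exact result file names are not assumed.

```python
from pathlib import Path


def find_result_files(results_dir: str) -> dict:
    """List the .csv and .json files under <results_dir>/latest_results.

    Hypothetical helper for illustration; not part of the SNPE SDK.
    """
    latest = Path(results_dir) / "latest_results"
    if not latest.exists():
        raise FileNotFoundError(f"no latest_results entry under {results_dir}")
    return {
        "csv": sorted(latest.glob("*.csv")),    # open in a spreadsheet viewer
        "json": sorted(latest.glob("*.json")),  # open in any text editor
    }
```

For this tutorial's configuration, the argument would be the expanded form of $SNPE_ROOT/benchmarks/mobilenet_ssd/results, for example via `os.path.expandvars`.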

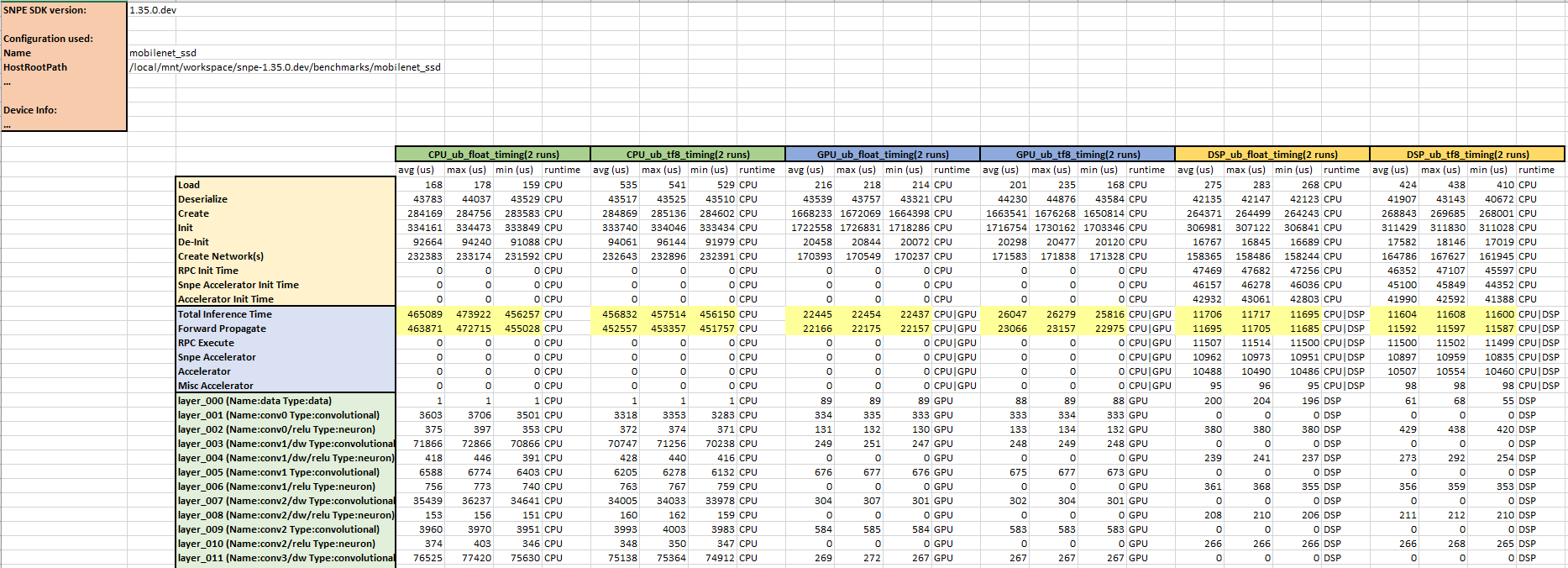

CSV Benchmark Results File

The CSV file contains results similar to the example below. Some measurements may not appear in the CSV file. To get all timing information, the profiling level needs to be set to detailed. By default, the profiling level is basic. Note that colored headings have been added for clarity.

A more detailed description of the CSV result file can be found in benchmark overview.

Comparing the Total Inference Time and Forward Propagate rows, we find that GPU and DSP inference is faster than CPU inference by a factor of about 17 to 39. Running MobilenetSSD inference on the GPU or DSP may therefore achieve higher performance.

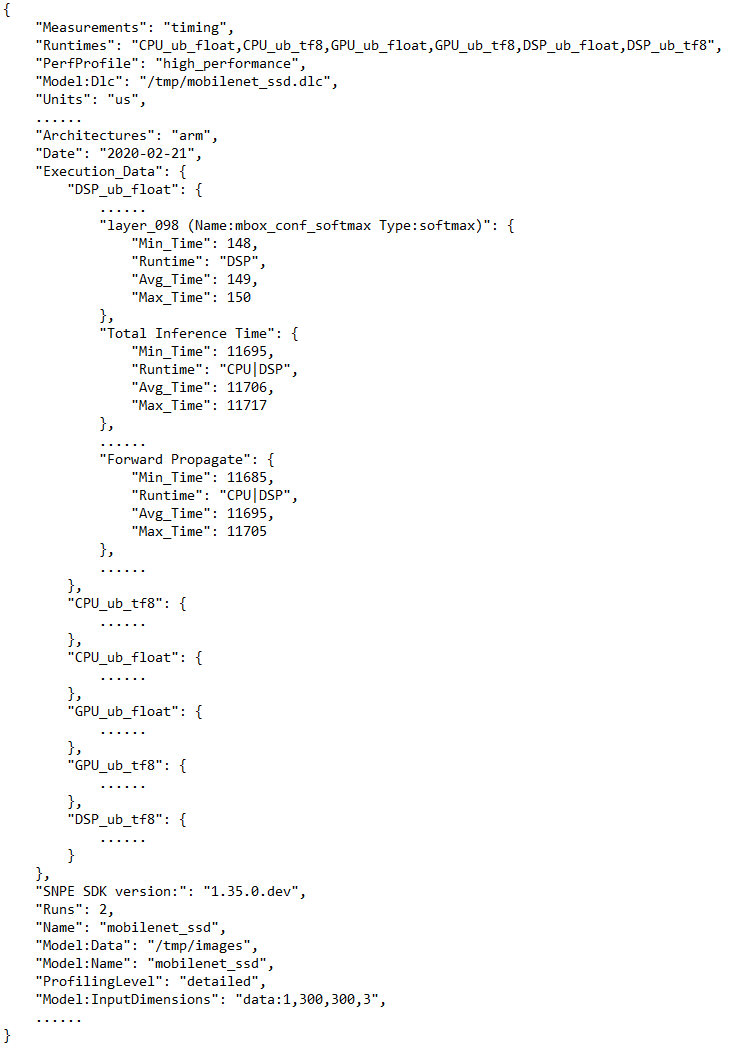

JSON Benchmark Results File

The benchmark results published in the CSV file can also be made available in JSON format. Run the benchmark via

python3 snpe_bench.py -c /tmp/mobilenetssd.json -a --generate_json

The contents are the same as in the CSV file but structured as key-value pairs, which makes the results simple to parse programmatically. The JSON file contains results similar to the example below.